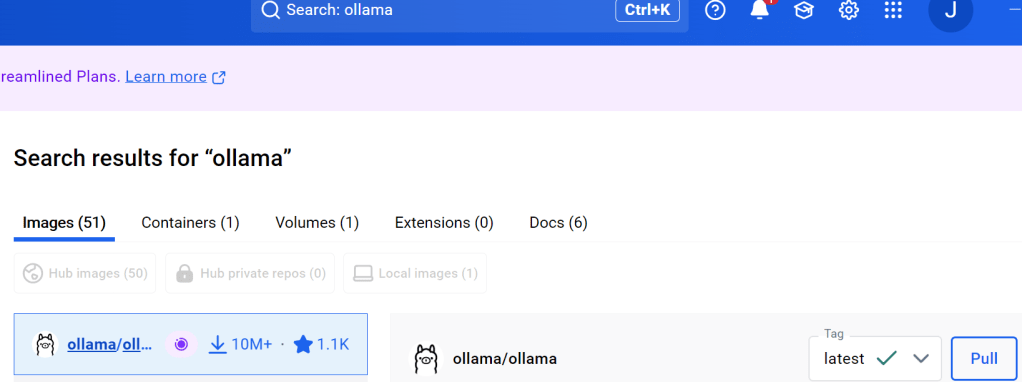

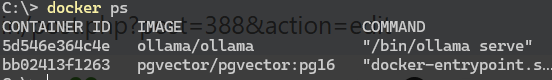

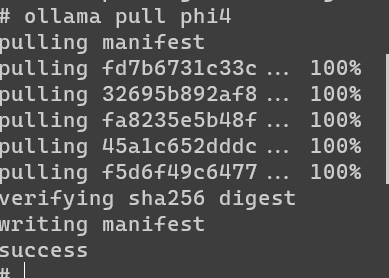

In Part 1 and Part 2, we set up our infrastructure: a PostgreSQL database with pgvector for storing embeddings, and Ollama running the Phi4 model locally. Now we’ll create a .NET application to bring these components together into a working RAG system. We’ll start by setting up our solution structure with proper test projects.

If you’re just joining, you’ll need to follow the steps in Parts 1 and 2 first to get PostgreSQL and Ollama running in Docker.

Let’s start by creating the .NET solution and projects from the command line (we could do this through Visual Studio’s UI, but I prefer keeping my command-line-fu strong).

Creating a new .NET project

Open PowerShell as an administrator. Browse to a directory where you’d like to create your solution – I keep mine in my Git repositories folder. Then run these commands:

First, create the solution:

dotnet new sln -n LocalRagConsoleDemo

You might see a prompt about the dev certificate. If so, run:

dotnet dev-certs https --trust

Now create our three projects, the main console app and two test projects:

dotnet new console -n LocalRagConsoleDemo

dotnet new nunit -n LocalRagConsoleUnitTests

dotnet new nunit -n LocalRagConsoleIntegrationTests

Finally, add everything to the solution, organizing the tests in their own folder:

dotnet sln LocalRagConsoleDemo.sln add LocalRagConsoleDemo/LocalRagConsoleDemo.csproj

dotnet sln LocalRagConsoleDemo.sln add LocalRagConsoleUnitTests/LocalRagConsoleUnitTests.csproj --solution-folder "Unit Tests"

dotnet sln LocalRagConsoleDemo.sln add LocalRagConsoleIntegrationTests/LocalRagConsoleIntegrationTests.csproj --solution-folder "Unit Tests"

Now we have a solution set up with proper separation of concerns: a main console project for our RAG application and separate projects for unit and integration tests. In the next section, we’ll add the required NuGet packages and start building our connection to PostgreSQL.

You can verify everything is set up correctly by opening LocalRagConsoleDemo.sln in Visual Studio or your preferred IDE. You should see the main console project, plus the two test projects organized in a “Unit Tests” solution folder.
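If you'd rather check the layout without opening an IDE, a small shell function can confirm that the files the commands above should have created are actually on disk. This is only a sketch, and it assumes a POSIX shell (Git Bash or WSL on Windows) rather than PowerShell itself. The name `check_layout` is made up for illustration and is not part of the dotnet CLI.

```shell
# check_layout is a hypothetical helper, not a dotnet command.
# Pass it the directory where you ran the commands (defaults to the
# current directory); it reports any solution or project file that is missing.
check_layout() {
  root="${1:-.}"
  rc=0

  # The solution file created by `dotnet new sln`
  if [ ! -f "$root/LocalRagConsoleDemo.sln" ]; then
    echo "missing: LocalRagConsoleDemo.sln"
    rc=1
  fi

  # One .csproj per project, each in a folder named after the project
  for proj in LocalRagConsoleDemo LocalRagConsoleUnitTests LocalRagConsoleIntegrationTests; do
    if [ ! -f "$root/$proj/$proj.csproj" ]; then
      echo "missing: $proj/$proj.csproj"
      rc=1
    fi
  done

  return "$rc"
}
```

Run `check_layout .` from the folder that holds the .sln file. No output and an exit code of 0 means the files are where they should be. The dotnet CLI can also help here: `dotnet sln LocalRagConsoleDemo.sln list` prints the projects the solution knows about, and `dotnet build LocalRagConsoleDemo.sln` confirms they compile.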

Adding Dependencies

Navigate to the LocalRagConsoleDemo project folder and add our initial NuGet packages:

dotnet add package Microsoft.Extensions.Http

dotnet add package Newtonsoft.Json

At this point, you should have a working solution structure:

- A main console project

- Two test projects in a “Unit Tests” solution folder

- Basic HTTP and JSON handling capabilities
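To confirm that both packages landed in the project file, you can look for their `PackageReference` entries, which is how `dotnet add package` records a dependency in the .csproj. Here's a rough sketch, again assuming a POSIX shell such as Git Bash or WSL. `has_package` is a hypothetical helper name, and it does a plain text match rather than parsing the XML properly.

```shell
# has_package is a hypothetical helper, not part of the dotnet CLI.
# Usage: has_package <path-to-csproj> <package-id>
# Exits 0 when the project file contains a PackageReference for that id.
has_package() {
  csproj="$1"
  package="$2"
  # -F matches the string literally, so dots in the package id
  # are not treated as regex wildcards; -q suppresses output.
  grep -qF "<PackageReference Include=\"$package\"" "$csproj"
}
```

For example, from the solution folder, `has_package LocalRagConsoleDemo/LocalRagConsoleDemo.csproj Newtonsoft.Json && echo "found"` should print `found` once the package has been added. The built-in alternative is `dotnet list package`, run from the project folder, which lists the packages the project references.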

You can verify the setup by opening LocalRagConsoleDemo.sln in Visual Studio or your preferred IDE.

What’s Next?

I’ll be back soon with a detailed walkthrough of building the RAG application. In the meantime, you can check out the working code at:

https://github.com/jimsowers/LocalRAGConsoleDemo

Happy Coding!

-Jim