In Part 1, we set up a PostgreSQL database with the pgvector extension in a Docker container (that's a mouthful of tech terms, sorry!).

Our goal is to build a local RAG (Retrieval-Augmented Generation) application combining Postgres for vector storage with a local AI model. Now, we’ll use Docker to run Ollama, an open-source tool that lets us run AI models locally on our machine.

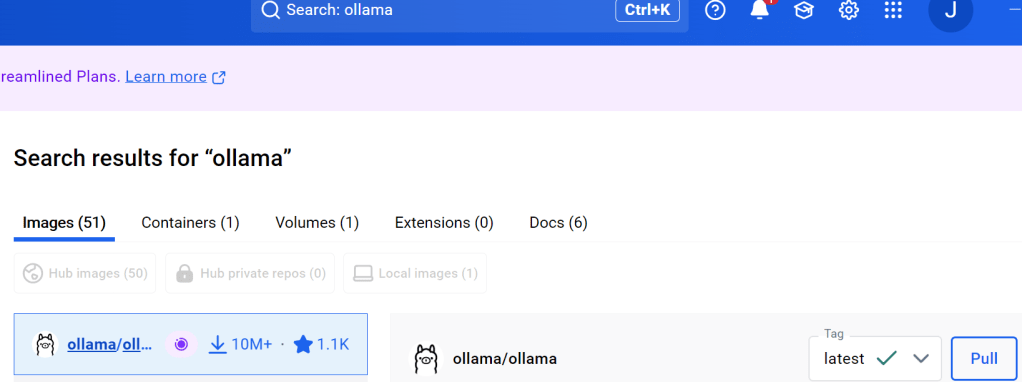

Step 1: Pulling the Ollama Docker container

You can pull the Ollama image from Docker Hub in a PowerShell prompt with this command:

docker pull ollama/ollama

Or, you can search for ollama/ollama in the Docker Desktop search bar and pull it from there.

Docker should already be running the Postgres container with pgvector from Part 1; now we will start the Ollama container alongside it.

Step 2: Starting the Ollama container

Before you start Ollama, read the Docker Hub documentation on starting it correctly for your graphics card and system: https://hub.docker.com/r/ollama/ollama. You can simply click Run on the image in Docker Desktop, but if you start it with the proper settings for your GPU, it may run much better. For an NVIDIA card, that page starts the container with the --gpus=all flag, which requires the NVIDIA Container Toolkit to be installed.

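For instance, I started mine from the command line with the command below. The last argument is the image ID of my local copy of the Ollama image; use ollama/ollama in its place when you run it yourself: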

docker run --name ollamaLocal --hostname=ollamaLocal --env=CUDA_VISIBLE_DEVICES=0 --env=GPU_MEMORY_UTILIZATION=90 --env=GPU_LAYERS=35 --env=NVIDIA_DRIVER_CAPABILITIES=compute,utility --env=PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin --env=OLLAMA_HOST=0.0.0.0 --env=LD_LIBRARY_PATH=/usr/local/nvidia/lib:/usr/local/nvidia/lib64 --env=NVIDIA_VISIBLE_DEVICES=all --volume=ollama:/root/.ollama --network=bridge -p 11434:11434 --restart=no --label='org.opencontainers.image.ref.name=ubuntu' --label='org.opencontainers.image.version=22.04' --runtime=runc -d sha256:f1fd985cee59a6403508e3ba26367744eafad7b7383ba960d80872aae61661b6
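If you don't need all of those settings, the Docker Hub page documents a much shorter NVIDIA startup. This Python sketch assembles that shorter command and runs it with subprocess. It is not the exact command I used; it assumes an NVIDIA GPU with the NVIDIA Container Toolkit installed and reuses this article's container name, ollamaLocal.

```python
import subprocess


def ollama_run_args(name: str = "ollamaLocal", gpu: bool = True) -> list[str]:
    """Build a minimal `docker run` command for the Ollama container.

    Assumes an NVIDIA GPU and the NVIDIA Container Toolkit when gpu=True.
    """
    args = ["docker", "run", "-d"]
    if gpu:
        args.append("--gpus=all")  # expose all NVIDIA GPUs to the container
    args += [
        "-v", "ollama:/root/.ollama",  # named volume keeps downloaded models
        "-p", "11434:11434",           # publish Ollama's default API port
        "--name", name,
        "ollama/ollama",               # image name rather than a local image ID
    ]
    return args


def start_ollama(name: str = "ollamaLocal", gpu: bool = True) -> None:
    # check=True raises CalledProcessError if docker reports an error,
    # e.g. when a container with this name already exists
    subprocess.run(ollama_run_args(name, gpu), check=True)
```

On a machine without an NVIDIA GPU, start_ollama(gpu=False) drops the --gpus flag so Ollama runs on the CPU.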

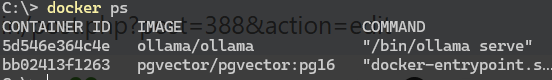

Check that both containers are running with this command:

docker ps

The output should list both containers, showing that Ollama and Postgres with pgvector are running.
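If you'd rather check this from a script, here is a small Python sketch that runs docker ps with a --format template so it prints only container names, then reports which expected containers are missing. The Postgres container name pgvector-db is a hypothetical placeholder; use whatever name you gave it in Part 1.

```python
import subprocess

# "pgvector-db" is a hypothetical name for the Part 1 Postgres container
EXPECTED = ["ollamaLocal", "pgvector-db"]


def missing_containers(ps_names: str, expected: list[str]) -> list[str]:
    """Return the expected container names absent from `docker ps` output."""
    running = {line.strip() for line in ps_names.splitlines() if line.strip()}
    return [name for name in expected if name not in running]


def running_container_names() -> str:
    # --format "{{.Names}}" prints one running container name per line
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Calling missing_containers(running_container_names(), EXPECTED) should return an empty list when both containers are up.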

To see which models you already have installed, run the following command in PowerShell. It refers to the container by its name, ollamaLocal; you can also use just the first few characters of the container ID instead, as long as they identify it uniquely (on my machine, 5d5 was enough, and usually three characters will do):

docker exec -it ollamaLocal ollama list

In this container, I already have phi4, deepseek-r1, and llama3.

For this project, I want to start with Microsoft's Phi4.

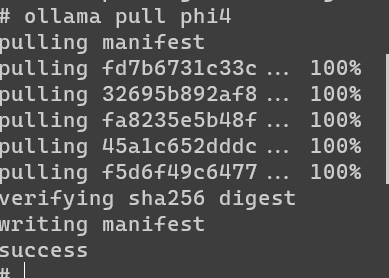
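Ollama also exposes the installed models over its REST API, at the /api/tags endpoint on the port we published (11434). This Python sketch, assuming the default localhost port mapping from the docker run command above, lists the models and checks whether phi4 is among them, which is the same information ollama list prints.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # port published by -p 11434:11434


def model_names(tags: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(names: list[str], model: str) -> bool:
    # Match the exact name (e.g. "phi4:latest") or any tag of the model ("phi4")
    return any(n == model or n.split(":", 1)[0] == model for n in names)


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    # GET /api/tags returns {"models": [{"name": ...}, ...]}
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))
```

With the container running, has_model(list_local_models(), "phi4") tells you whether the pull step that follows is needed.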

If phi4 isn't in your list yet, use the docker exec command to open an interactive shell in the container:

docker exec -it ollamaLocal /bin/sh

Then run the pull command in that shell:

ollama pull phi4
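The same download can be started without opening a shell in the container by calling Ollama's /api/pull endpoint. A Python sketch, assuming the default port 11434 and the request field model used in recent Ollama API docs (older releases called it name):

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str,
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    # stream=False asks Ollama for a single JSON reply once the pull finishes
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str) -> str:
    # Large models can take many minutes to download, hence the long timeout
    with urllib.request.urlopen(build_pull_request(model), timeout=3600) as resp:
        return json.load(resp).get("status", "")
```

pull_model("phi4") blocks until the download finishes and returns the status string, which Ollama reports as success when the pull completes.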

Step 3: Checking that Phi4 runs in Ollama

Staying in the interactive shell (at the # prompt), run this command:

ollama run phi4 "Tell me a joke about a duck and a pencil"

You should get a joke back from the phi4 model.

To leave the interactive shell, type exit or press Ctrl+D.
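Outside the container, an application can reach the same model through Ollama's /api/generate endpoint on port 11434 instead of docker exec. This Python sketch, assuming the default port mapping, sends the same prompt with streaming turned off, so Ollama returns a single JSON object whose response field holds the generated text.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    # stream=False returns one JSON object instead of a stream of chunks
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    # The generated text is in the "response" field of the reply
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, print(generate("phi4", "Tell me a joke about a duck and a pencil")) should print a joke much like the one from ollama run.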

There you go: if all that went well, you have the phi4 language model running locally! Let's review what we've accomplished:

- Postgres with pgvector is running in a Docker container

- Ollama is running with GPU optimization

- Microsoft’s Phi4 model is ready to use

In the next articles, we’ll build a .NET application that ties these pieces together. We’ll cover generating embeddings from our documents, storing them in pgvector, and using Phi4 to answer questions based on our stored knowledge.

If you need to stop the containers, run docker stop with their names or IDs (for example, docker stop ollamaLocal), or stop them from Docker Desktop.